Wan 2.1: The Game-Changing Open Source Video Generation Model

Posted on February 26, 2025 - News

Hey there! I've been playing with the new Wan 2.1 model recently, and honestly, it's blown me away. Let me tell you everything about this incredible open-source video generation tool that's making waves in the AI community.

What is Wan 2.1?

Wan 2.1 is a comprehensive open-source suite of video foundation models that's pushing the boundaries of what's possible with AI video generation. It's developed by the Wan team and available on GitHub.

I first discovered it while looking for alternatives to expensive commercial video generation solutions. What caught my eye immediately was that, according to the developers' own benchmarks, it outperforms many closed-source models while being completely free and open.

The model comes in different sizes:

- A lightweight 1.3B parameter version that runs on consumer GPUs

- A more powerful 14B parameter version for professional results

What makes Wan 2.1 special is its versatility. It can handle:

- Text-to-Video generation

- Image-to-Video conversion

- Video editing capabilities

- Text-to-Image creation

- Video-to-Audio features

Perhaps the most impressive thing about Wan 2.1 is that, according to its developers, it's the first video model that can generate both Chinese and English text within videos. This feature alone makes it incredibly useful for creating content with text overlays.

Getting Started with Wan 2.1

Installing Wan 2.1 is pretty straightforward. You'll need to:

- Clone the GitHub repository

git clone https://github.com/Wan-Video/Wan2.1.git

cd Wan2.1

- Install the dependencies

pip install -r requirements.txt

- Download the model weights (they offer several options depending on your needs)
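For the weight download step, here's a minimal Python sketch. It assumes the checkpoints are published on Hugging Face under the Wan-AI organization, with names matching the --ckpt_dir folders used later in this post, and that the third-party huggingface_hub package is installed. The names CHECKPOINTS, local_dir_for, and download_weights are my own, made up for illustration.

```python
from pathlib import Path

# Assumed Hugging Face repo IDs; the folder names mirror the --ckpt_dir
# values used in the commands later in this post.
CHECKPOINTS = {
    "t2v-1.3B": "Wan-AI/Wan2.1-T2V-1.3B",
    "t2v-14B": "Wan-AI/Wan2.1-T2V-14B",
    "i2v-14B": "Wan-AI/Wan2.1-I2V-14B-720P",
}


def local_dir_for(task: str) -> Path:
    """Return the local folder where a task's weights should live."""
    repo_id = CHECKPOINTS[task]
    return Path(".") / repo_id.split("/", 1)[1]


def download_weights(task: str) -> Path:
    """Download one checkpoint (a large download) and return its folder."""
    # Imported lazily so the helpers above work without the package installed.
    from huggingface_hub import snapshot_download

    target = local_dir_for(task)
    snapshot_download(repo_id=CHECKPOINTS[task], local_dir=str(target))
    return target
```

If those assumptions hold, calling download_weights("t2v-1.3B") from inside the cloned repo should leave the weights in ./Wan2.1-T2V-1.3B, ready for the commands below.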

The best part? The T2V-1.3B model only requires about 8.19 GB of VRAM, which means it can run on almost any consumer GPU. I've been using it on my RTX 4090, and it can generate a 5-second 480P video in about 4 minutes.

Text-to-Video Generation with Wan 2.1

The text-to-video capabilities of Wan 2.1 are where it really shines. I've tried several prompts, and the results are impressively consistent.

To generate a video using the 14B model, you'd use:

python generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

If you're working with limited GPU memory like I was initially, you can use the 1.3B model with some memory optimization flags:

python generate.py --task t2v-1.3B --size 832*480 --ckpt_dir ./Wan2.1-T2V-1.3B --offload_model True --t5_cpu --sample_shift 8 --sample_guide_scale 6 --prompt "Two cats boxing"
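If you'd rather drive these commands from a script, here's a rough sketch of a wrapper. It only uses the flags shown in the two commands above; build_t2v_command and generate are hypothetical helper names of mine, and I'm assuming the repo was cloned into a folder called Wan2.1.

```python
import subprocess
import sys


def build_t2v_command(task, size, ckpt_dir, prompt, low_memory=False, extra_args=()):
    """Build the argument list for the repo's generate.py script."""
    cmd = [
        sys.executable, "generate.py",
        "--task", task,
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--prompt", prompt,
    ]
    if low_memory:
        # Same memory-saving flags as the 1.3B command above.
        cmd += ["--offload_model", "True", "--t5_cpu"]
    return cmd + list(extra_args)


def generate(cmd, repo_dir="Wan2.1"):
    """Run generate.py from inside the cloned repository (assumed path)."""
    subprocess.run(cmd, cwd=repo_dir, check=True)


cmd = build_t2v_command(
    task="t2v-1.3B",
    size="832*480",
    ckpt_dir="./Wan2.1-T2V-1.3B",
    prompt="Two cats boxing",
    low_memory=True,
    extra_args=["--sample_shift", "8", "--sample_guide_scale", "6"],
)
print(" ".join(cmd))
```

Passing the arguments as a list means no shell is involved, so the * in 832*480 can't be expanded as a wildcard. Call generate(cmd) once the weights are in place.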

One thing I've found that significantly improves results is using the prompt extension feature. This automatically enriches your prompt with more details, resulting in higher quality videos. You can use either the DashScope API or a local model for this.
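Here's a small sketch of how I'd switch prompt extension on from a script. Treat the flag names (--use_prompt_extend, --prompt_extend_method), the method values (dashscope, local_qwen), and the DASH_API_KEY environment variable as assumptions from my reading of the repo's README, and check them against your checkout. The helper name with_prompt_extension is hypothetical.

```python
import os

# Assumed values for --prompt_extend_method, based on my reading of the README.
EXTEND_METHODS = ("dashscope", "local_qwen")


def with_prompt_extension(cmd, method="local_qwen"):
    """Return a copy of a generate.py argument list with prompt extension on."""
    if method not in EXTEND_METHODS:
        raise ValueError(f"unknown prompt extension method: {method!r}")
    if method == "dashscope" and "DASH_API_KEY" not in os.environ:
        # Assumption: the DashScope backend reads its key from DASH_API_KEY.
        raise RuntimeError("set DASH_API_KEY before using the DashScope backend")
    return list(cmd) + ["--use_prompt_extend", "--prompt_extend_method", method]


base = ["python", "generate.py", "--task", "t2v-14B", "--size", "1280*720",
        "--ckpt_dir", "./Wan2.1-T2V-14B", "--prompt", "Two cats boxing"]
extended = with_prompt_extension(base, method="local_qwen")
print(extended[-3:])
```

The helper returns a new list instead of modifying the original, so you can generate the same prompt with and without extension and compare the results.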

Image-to-Video: Bringing Photos to Life

I've been particularly impressed with the Image-to-Video capabilities. It's like magic seeing still photos suddenly come alive with movement.

To convert an image to video, use:

python generate.py --task i2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-I2V-14B-720P --image examples/i2v_input.JPG --prompt "Summer beach vacation style"

The model preserves the aspect ratio of your original image while adding natural, coherent motion. I've tried it with landscape photos, portraits, and even abstract art - the results are consistently good.
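To make the aspect ratio behavior concrete, here's a back-of-the-envelope sketch. It assumes that for image-to-video the --size value acts as a pixel-area budget, with the output shape following the input image and each side rounded down to a multiple of 16. That rounding rule is my assumption, not something I've verified against the model code, and i2v_output_size is a made-up helper.

```python
import math


def i2v_output_size(image_w, image_h, max_area=1280 * 720, multiple=16):
    """Estimate an output (width, height) that keeps the input aspect ratio.

    Assumption: the requested size is a pixel-area budget and each side is
    rounded down to a multiple of 16; the real rounding may differ.
    """
    # Solve w * h = max_area with h / w = image_h / image_w, in integer math.
    height = math.isqrt(max_area * image_h // image_w)
    width = math.isqrt(max_area * image_w // image_h)
    return (width // multiple * multiple, height // multiple * multiple)


print(i2v_output_size(1920, 1080))  # 16:9 landscape input -> (1280, 720)
print(i2v_output_size(1080, 1920))  # 9:16 portrait input  -> (720, 1280)
print(i2v_output_size(1000, 1000))  # square input         -> (960, 960)
```

Under these assumptions, a portrait photo comes out as a portrait video with roughly the same pixel count instead of being squeezed into 1280 by 720.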

Why Wan 2.1 Stands Out from Other Models

After testing various video generation models, I can confidently say Wan 2.1 has several advantages:

- Performance: In the developers' published benchmarks, it consistently outperforms other open-source models and even some commercial solutions

- Accessibility: The 1.3B model runs on consumer hardware, making advanced video generation accessible to everyone

- Versatility: From text-to-video to image animation, it handles multiple tasks well

- Text Generation: Being able to generate readable text in videos is a huge advantage

The technical innovation behind Wan 2.1 is impressive too. It uses a novel 3D causal VAE architecture called Wan-VAE that can handle videos of any length while preserving temporal information.
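Why does "causal" matter for length? Here's a toy illustration in plain Python. This is not Wan-VAE's actual code, just a one-dimensional filter over time that I wrote to show the idea: each output frame depends only on the current and earlier frames, so a long clip can be processed in chunks while carrying a small cache forward, and the result matches processing it all at once.

```python
def causal_conv(frames, kernel, history=None):
    """Causal 1D convolution over time: output t uses only frames <= t.

    `history` holds the last len(kernel) - 1 frames of the previous chunk
    (zeros at the very start), which is all the memory the filter needs.
    """
    k = len(kernel)
    if history is None:
        history = [0.0] * (k - 1)
    padded = list(history) + list(frames)
    out = [
        sum(kernel[j] * padded[t + j] for j in range(k))
        for t in range(len(frames))
    ]
    # Return the outputs plus the cache for the next chunk.
    return out, padded[len(padded) - (k - 1):]


kernel = [0.25, 0.5, 0.25]
video = [float(i) for i in range(10)]  # stand-in for 10 frames

# Process the whole clip at once.
full, _ = causal_conv(video, kernel)

# Process the same clip in chunks of 4, carrying the cache forward.
chunked, cache = [], None
for start in range(0, len(video), 4):
    out, cache = causal_conv(video[start:start + 4], kernel, cache)
    chunked.extend(out)

print(chunked == full)  # True
```

Memory use depends on the chunk size, not the clip length, which is the property that makes arbitrarily long videos tractable in principle.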

Common Questions About Wan 2.1

What GPU do I need to run Wan 2.1?

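The T2V-1.3B model needs about 8.19 GB of VRAM, so a card with more than 8 GB gives you the most headroom. I've only tested it on an RTX 4090. Cards with exactly 8 GB, like the RTX 3060 Ti, sit right at that limit, so I can't promise they'll work, even with the memory-saving flags (--offload_model True and --t5_cpu).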

Can Wan 2.1 generate long videos?

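In principle, yes. The developers say the Wan-VAE architecture can encode and decode unlimited-length 1080P videos without losing historical temporal information. Keep in mind that this describes the VAE itself. The only generation I can vouch for is the 5-second 480P clip I mentioned earlier.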

Is Wan 2.1 free to use?

Absolutely! It's released under the Apache 2.0 license, which means you can use it freely, even for commercial projects.

How does Wan 2.1 compare to commercial models like Runway or Pika?

Based on the developers' evaluations, Wan 2.1 outperforms both open-source and many closed-source models in quality. In my own testing, it has produced results comparable to commercial solutions in many cases.

Final Thoughts

Wan 2.1 represents a significant step forward for open-source video generation. It's democratizing access to high-quality video creation tools that were previously only available through expensive commercial services.

Whether you're a content creator, developer, or just someone interested in AI, Wan 2.1 is definitely worth checking out. The combination of performance, accessibility, and versatility makes it one of the most exciting developments in the generative AI space this year.

Have you tried Wan 2.1 yet? What kinds of videos have you created with it? I'd love to hear about your experiences!

Related Posts

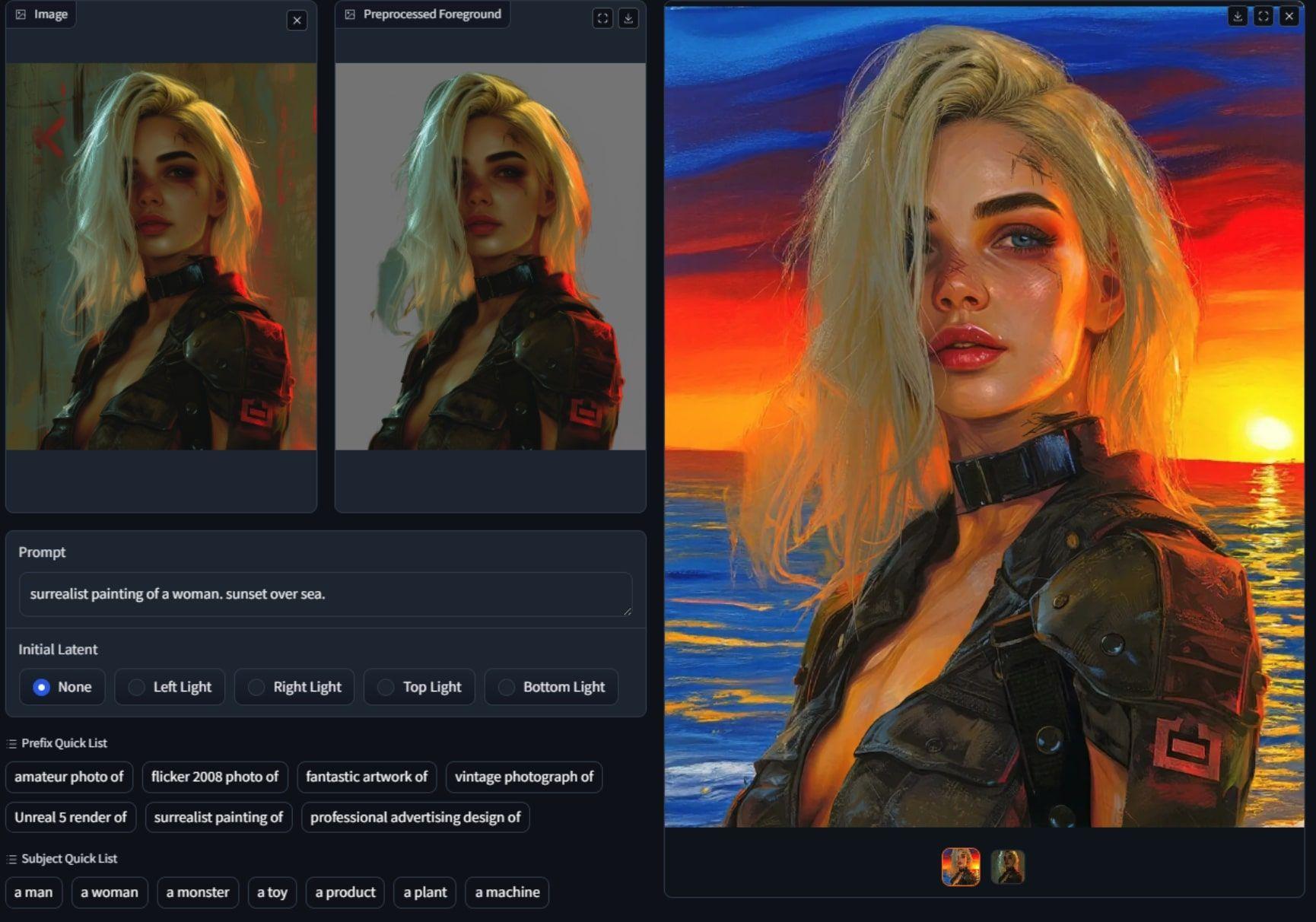

IC-Light V2 Update: Game-Changing AI Detail You'll Love

Discover the groundbreaking features of IC-Light V2 in this complete guide! Learn how this AI image model enhances detail preservation, respects your unique style, and outperforms previous versions like Stable Diffusion.

Getting Started with Recraft V3: The AI Image Tool That's Actually Easy to Use

Discover how Recraft V3 beats DALL-E & Midjourney at image generation. Get my exact prompts + see real results from 50 free daily credits 🎨

BlackForestLabs' FLUX.1 Tools: A Game-Changing Update to Their AI Image Platform

🔥 BlackForestLabs Just Broke the AI Image Game - See The Tool That's Making Midjourney Sweat!

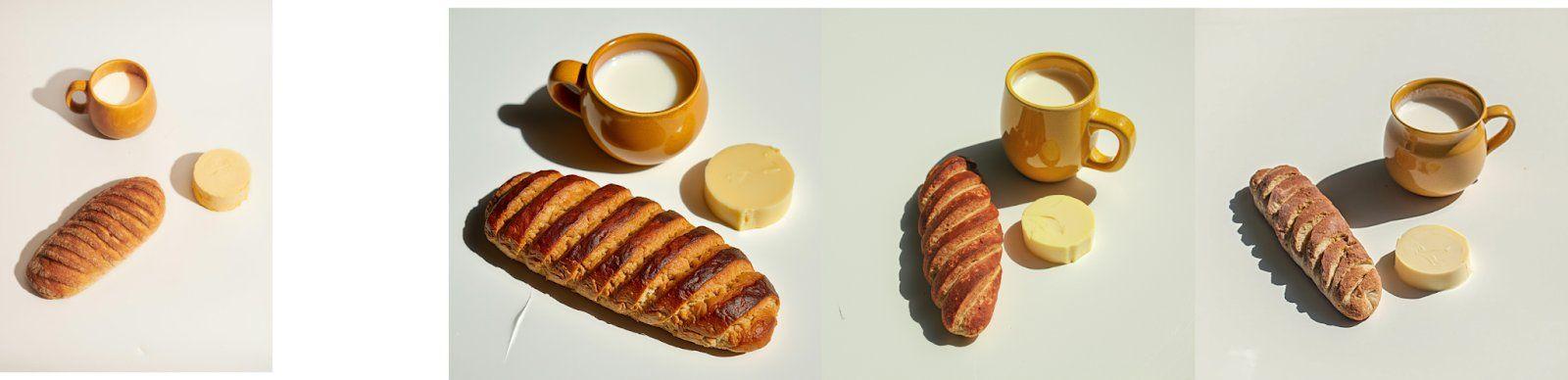

Ruyi-Models: Turn Still Images into Cinematic Videos

Learn how to transform still images into cinematic 24fps videos with Ruyi-Models, an open-source AI tool. This guide covers installation, usage tips, and best practices for generating high-quality 768p videos from images.