New CLIP-L Model for Flux.1

Posted on March 14, 2025 - General

Hey there, fellow AI enthusiasts! If you're anything like me, you're always on the lookout for the latest and greatest tools to take your text-to-image generations to the next level. Well, buckle up because I've got some exciting news that's going to blow your mind!

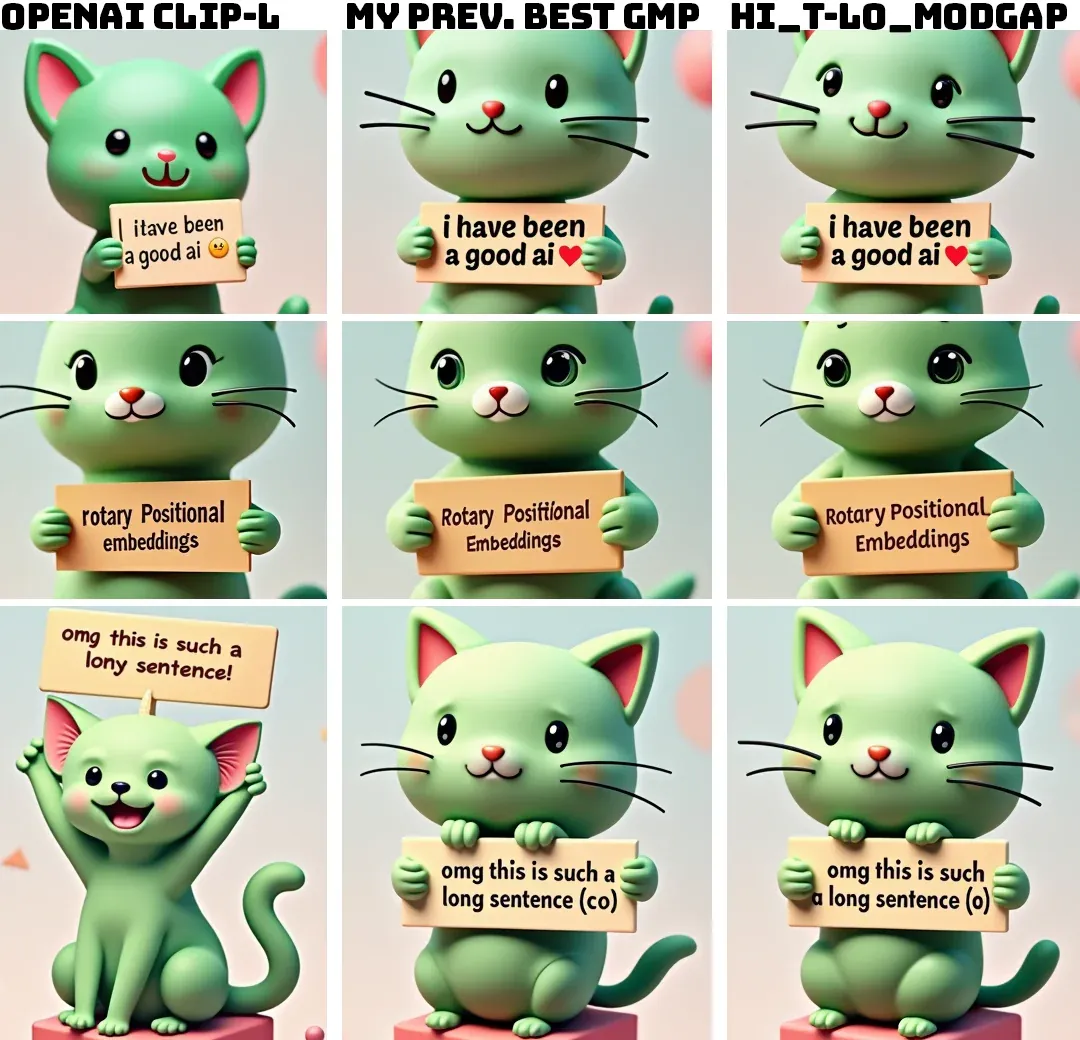

Recently, a brilliant mind in the AI community, zer0int, has released a game-changing update to the CLIP-L text encoder. This new model, fine-tuned for Flux.1, boasts improved text adherence and detail accuracy. In other words, your generated images are about to look sharper and more true to your prompts than ever before!

But wait, there's more! zer0int has also provided a handy-dandy .safetensors download, making it a breeze to integrate this model into your workflow. Whether you're using Stable Diffusion, Flux, or any other text-to-image generator, this CLIP-L update is a must-have.

You can download the model: https://huggingface.co/zer0int/CLIP-GmP-ViT-L-14/tree/main

Now, I know what you're thinking: "But how does it compare to the Long-CLIP model?" Well, zer0int has got you covered there too. In a nutshell, the CLIP-L shines when it comes to shorter prompts and text-heavy tasks, while Long-CLIP excels at handling more elaborate scenes. It's like having a Swiss Army knife of text-to-image models!

So, what are you waiting for? Head over to the Hugging Face repository, grab the ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.safetensors file, and start generating some mind-blowing images! And if you're feeling generous, consider tossing a few bucks zer0int's way to help keep those AI critters fed and happy. After all, they're working hard to make our dreams of photorealistic images a reality!

FAQs

-

What is the difference between ViT-L-14-TEXT-detail-improved-hiT-GmP-HF.safetensors and ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.safetensors? The smaller file, ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.safetensors, contains only the text encoder, which is all you need for text-to-image generation. The larger file, ViT-L-14-TEXT-detail-improved-hiT-GmP-HF.safetensors, includes both the text encoder and the vision transformer, which is useful for other tasks but not necessary for generative AI.

-

How do I use this CLIP-L update in my text-to-image workflow? Simply download the ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.safetensors file, place it in your models/clip folder (e.g., ComfyUI/models/clip), and select it in your CLIP loader. It's that easy!

-

Will this CLIP-L update work with my existing Stable Diffusion or Flux models? Absolutely! This CLIP-L update is compatible with SD 1.5, SDXL, SD3, and Flux. These models all use the stock CLIP-L by default, so you can seamlessly integrate this improved version into your workflow.

Conclusion

There you have it, folks! With zer0int's CLIP-L update, the sky's the limit when it comes to your text-to-image generations. Whether you're a seasoned pro or just starting out, this model is sure to take your creations to new heights. So, what are you waiting for? Get out there, experiment, and let your imagination run wild!

If you're looking to refine your images even further, you can also sharpen image online with easy-to-use tools that enhance clarity and detail.

Your generated images are about to look sharper and more true to your prompts than ever before!

Citations: https://www.reddit.com/r/StableDiffusion/comments/1f83d0t/new_vitl14_clipl_text_encoder_finetune_for_flux1/ https://www.youtube.com/watch?v=gCzJI4sKr1I&t=1s

Related Posts

Web UI vs Comfy UI: How Should AI Painting Beginners Choose?

This article compares and contrasts two popular tools used for AI image generation - Web UI and Comfy UI. It explores their similarities, backgrounds, advantages, and disadvantages to help beginners in AI painting decide which tool might be more suitable for their needs.

FluxGym: Low-VRAM Training GUI for flux lora

FluxGym is an open-source web UI that enables users to train Flux LoRAs on computers with LOW VRAM (12GB/16GB/20GB) support, making advanced fitness technology accessible to all.

Pollo.ai Review: The All-in-One AI Video & Image Generator That Saves Money

Discover how Pollo.ai combines multiple AI models in one platform, saving you time and money. Create stunning videos and images without technical skills or multiple subscriptions. Try it today!